
Identifying Correct or Incorrect Emotion Recognition from Facial Expression Time Series using R

Introduction

Facial Expression Recognition has advanced significantly with the emergence of automatic emotion recognition systems.

However, the outputs of these systems can be difficult to handle:

- The sheer volume of data from high-frequency time recordings,

- The presence of autocorrelation over time,

- The inclusion of multiple variables.

In this workshop, we will explore how to process and analyse such data using R.

Case study

78 participants (20 males, 58 females) were recorded while being asked to express six emotions; these recordings constitute the BU-4DFE database (Yin et al., 2008).

A total of 467 video recordings were obtained (one participant completed only five videos) and analysed using Affectiva’s Affdex Emotion Recognition system integrated within the iMotions Lab software.

Each video frame was scored for the likelihood of expressing the following emotions: Happiness, Surprise, Disgust, Fear, Sadness, and Anger, with values from 0 (not recognised) to 1 (fully recognised).

Did the participants express the emotion intended by the instructions?

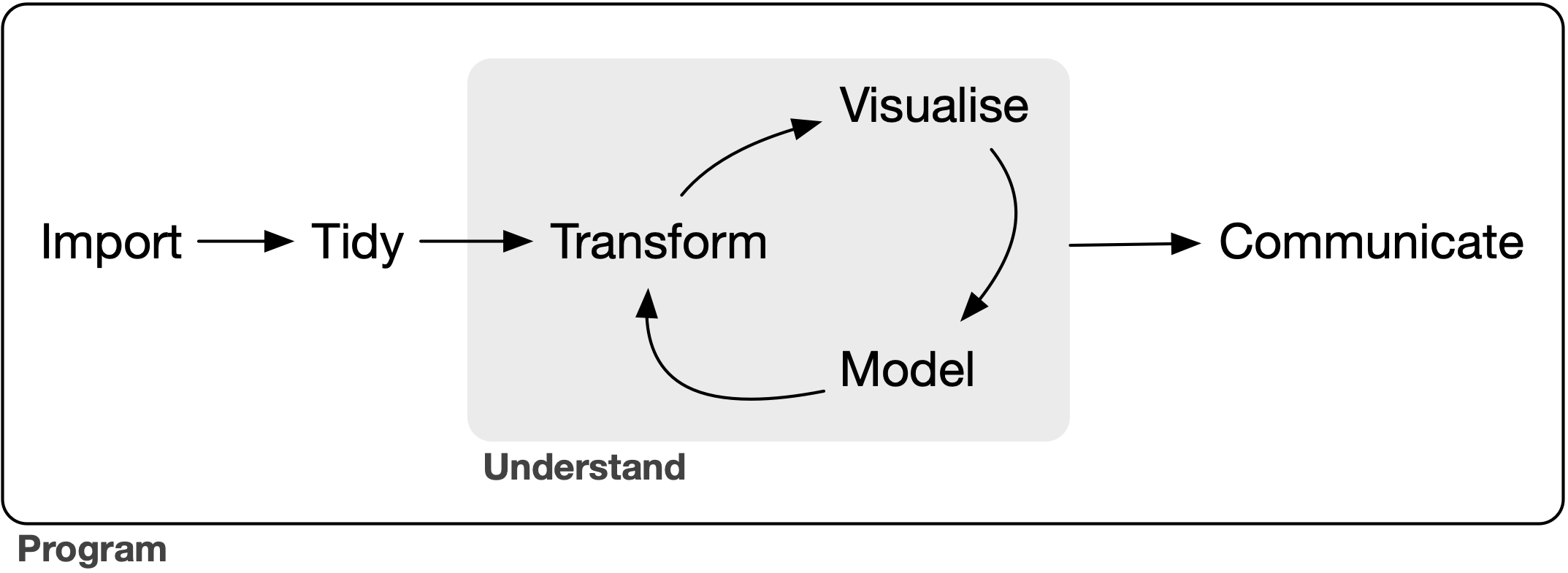

Objectives

- Import, Tidy and Transform

- Visualise

- Model

- Communicate

Technological Choices


There is little difference between R and Python for research purposes, but R is, in my view, easier to read and write.

This workshop assumes some familiarity with R, particularly:

- The “tidyverse” coding style

- Use of the native pipe operator |> rather than the %>% pipe from the {magrittr} package

Note

The pipe operator passes the object on its left-hand side as the first argument of the function on its right-hand side.

So instead of writing f(arg1 = x, arg2 = y), you write x |> f(arg2 = y).
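As a minimal illustration of the note above (the numbers are arbitrary and the native pipe requires R 4.1 or later), the nested and piped forms give the same result:

```r
# Nested call: read from the inside out
nested <- round(mean(c(0.2, 0.4, 0.9)), digits = 1)

# Piped call: read from left to right, each result feeds the next function
piped <- c(0.2, 0.4, 0.9) |>
  mean() |>
  round(digits = 1)

identical(nested, piped)
```

The piped version reads in the order the operations happen, which is the main reason it is used throughout the scripts.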

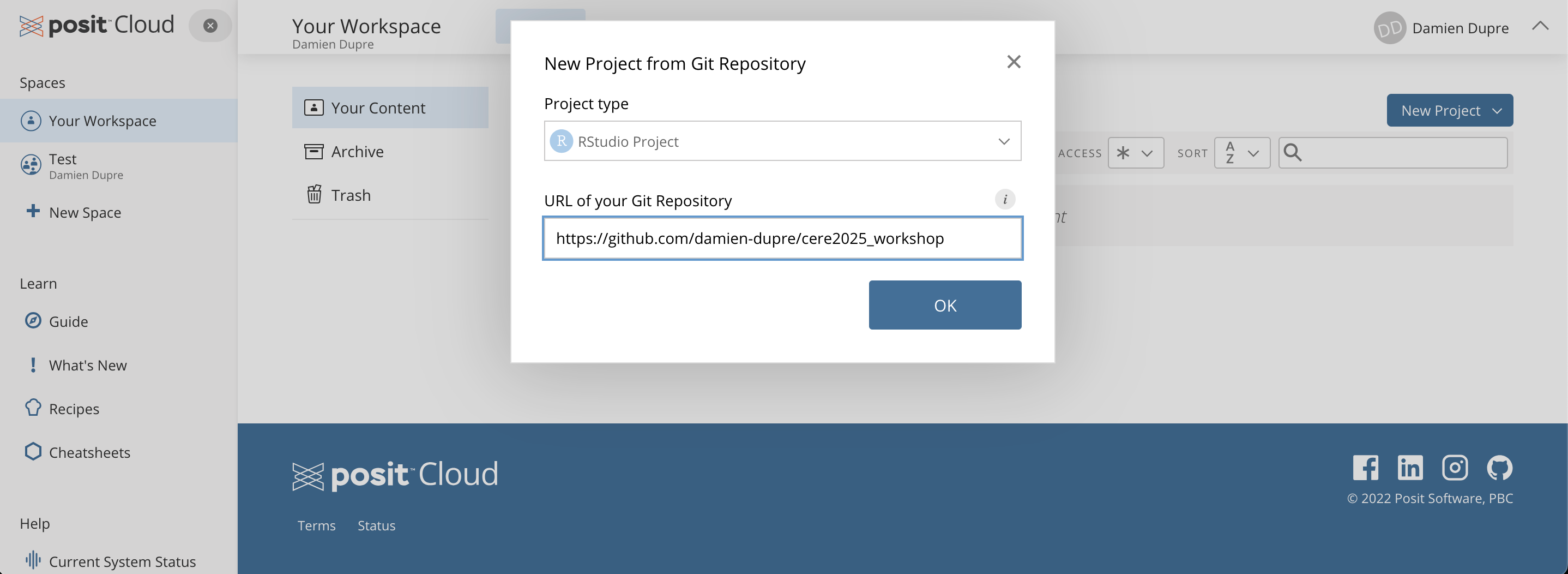

Using RStudio in Posit Cloud

Although you may use your own R installation, there are excellent and free cloud-based options:

- Google Colab with Jupyter Notebook

- GitHub Codespaces with Visual Studio Code

- Posit Cloud with RStudio

Warning

The free tier on Posit Cloud provides only 25 hours of usage per month.

🛠️ Now, it’s Your Turn!

- In your browser, sign up or log in at https://posit.cloud

- Click on New Project and choose New Project from Git Repository

- Enter https://github.com/damien-dupre/cere2025_workshop when prompted for the repository URL

Resources

- All 467 csv files are in the data/ folder

- All R scripts used are in the scripts/ folder

- Slides and supplementary material are in the output/ folder

cere2025_workshop/ folder structure

cere2025_workshop/

├── data/

│ ├── F001_Angry.csv

│ ├── F001_Disgust.csv

│ └── ...

├── scripts/

│ ├── 1_import_tidy_transform.R

│ ├── 2_visualise.R

│ ├── 3_model.R

│ └── 4_communicate.R

└── output/

├── slides.html

├── slides.qmd

├── slides_files/

└── ...

1. Import, Tidy and Transform

Import

Start by installing the necessary packages:

install.packages("tidyverse") # Metapackage for data transformation and visualisation

install.packages("fs") # Handle file and folder paths

install.packages("here") # Build paths relative to the project root, whatever the system

install.packages("report") # Standardise the reporting of statistical models

And load them:
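Loading the packages could look like this (a sketch that assumes the four packages above have been installed; the exact calls in the workshop script may differ):

```r
# Attach the packages installed above for the current R session
library(tidyverse) # dplyr, tidyr, readr, ggplot2, stringr, forcats, ...
library(fs)        # file and folder paths
library(here)      # paths relative to the project root
library(report)    # textual reports of statistical models
```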


We will combine all .csv files into a single data frame:
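One way to do this, shown here as a hedged sketch rather than the workshop's exact script, is to list the files with fs::dir_ls() and pass them all to readr::read_csv(), whose id argument records the originating file in a new column. The temporary folder and the two tiny files below are invented stand-ins for the data/ folder (in the project, the folder path would typically come from here::here("data")):

```r
library(readr)
library(fs)

# Create two tiny CSV files in a temporary folder to stand in for data/
# (file names follow the workshop pattern; the values are invented)
data_dir <- path(tempdir(), "data_demo")
dir_create(data_dir)
demo <- data.frame(frame = 1:2, anger = c(0.1, 0.2), happiness = c(0.7, 0.8))
write_csv(demo, path(data_dir, "F001_Angry.csv"))
write_csv(demo, path(data_dir, "F001_Happy.csv"))

# List every .csv file and read them into a single data frame;
# id = "source" stores the path of the originating file in a `source` column
df <- data_dir |>
  dir_ls(glob = "*.csv") |>
  read_csv(id = "source", show_col_types = FALSE)

df
```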

Preview the first 5 rows of the df object:

# A tibble: 5 × 8

source frame anger disgust fear happiness sadness surprise

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 /Users/damienhome/Dr… 1 2.00e-5 0.00426 4.60e-5 0.0000180 2.40e-4 0.00194

2 /Users/damienhome/Dr… 2 2.00e-5 0.00426 4.60e-5 0.0000180 2.39e-4 0.00194

3 /Users/damienhome/Dr… 3 2.00e-5 0.00426 4.60e-5 0.0000180 2.40e-4 0.00194

4 /Users/damienhome/Dr… 4 2.00e-5 0.00426 4.60e-5 0.0000180 2.40e-4 0.00194

5 /Users/damienhome/Dr… 5 2.00e-5 0.00426 4.60e-5 0.0000180 2.39e-4 0.00194

Tidy and Transform

We will update the source variable to retain only the file name:
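A minimal sketch of this step, assuming the file names follow the participant_instruction pattern seen in the data/ folder (the two paths below are invented for illustration):

```r
library(dplyr)
library(tidyr)
library(fs)

# Toy data frame with full paths in `source` (hypothetical paths)
df <- tibble::tibble(
  source = c("/some/folder/data/F001_Angry.csv", "/some/folder/data/F002_Happy.csv"),
  frame = c(1, 1)
)

df_tidy <- df |>
  # drop the folders and the .csv extension to keep only the file name
  mutate(file = source |> path_file() |> path_ext_remove() |> as.character()) |>
  # split the file name into participant and instruction, keeping `file`
  separate(col = file, into = c("ppt", "instruction"), sep = "_", remove = FALSE) |>
  select(-source)

df_tidy
```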

Importantly, we need to transform this wide data frame (i.e., one column per emotion, side by side) into a long data frame (i.e., the emotions are stacked in a single “emotion” variable and their values in a single “recognition” variable):
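The wide-to-long reshaping can be sketched with tidyr::pivot_longer(). The toy data frame below has only two emotion columns and invented values; the column names emotion and recognition match those of df_tidy_long:

```r
library(tidyr)

# Toy wide data: one row per frame, one column per emotion
df_wide <- tibble::tibble(
  frame = c(1, 2),
  file = "F001_Angry",
  anger = c(0.1, 0.2),
  happiness = c(0.7, 0.8)
)

# Long data: one row per frame and emotion, a single value column
df_long <- df_wide |>
  pivot_longer(
    cols = c(anger, happiness),
    names_to = "emotion",
    values_to = "recognition"
  )

df_long
```

With six emotion columns, each frame of the wide data frame becomes six rows in the long one.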


The df_tidy_long object has 280,200 rows. Let’s look at its first rows:

# A tibble: 10 × 6

frame file ppt instruction emotion recognition

<dbl> <chr> <chr> <chr> <chr> <dbl>

1 1 F001_Angry F001 Angry anger 0.00002

2 1 F001_Angry F001 Angry disgust 0.00426

3 1 F001_Angry F001 Angry fear 0.000046

4 1 F001_Angry F001 Angry happiness 0.000018

5 1 F001_Angry F001 Angry sadness 0.00024

6 1 F001_Angry F001 Angry surprise 0.00194

7 2 F001_Angry F001 Angry anger 0.0000200

8 2 F001_Angry F001 Angry disgust 0.00426

9 2 F001_Angry F001 Angry fear 0.0000460

10 2 F001_Angry F001 Angry happiness 0.0000180

🛠️ Now, it’s Your Turn!

- Open “1_import_tidy_transform.R” in the scripts/ folder

- Select all the lines and click on the Run icon (top left)

- Observe your df_tidy_long object

Note

Instead of clicking on the Run icon, you can also press:

- CTRL + ENTER (Windows)

- Command + ENTER (Mac)


2. Visualise

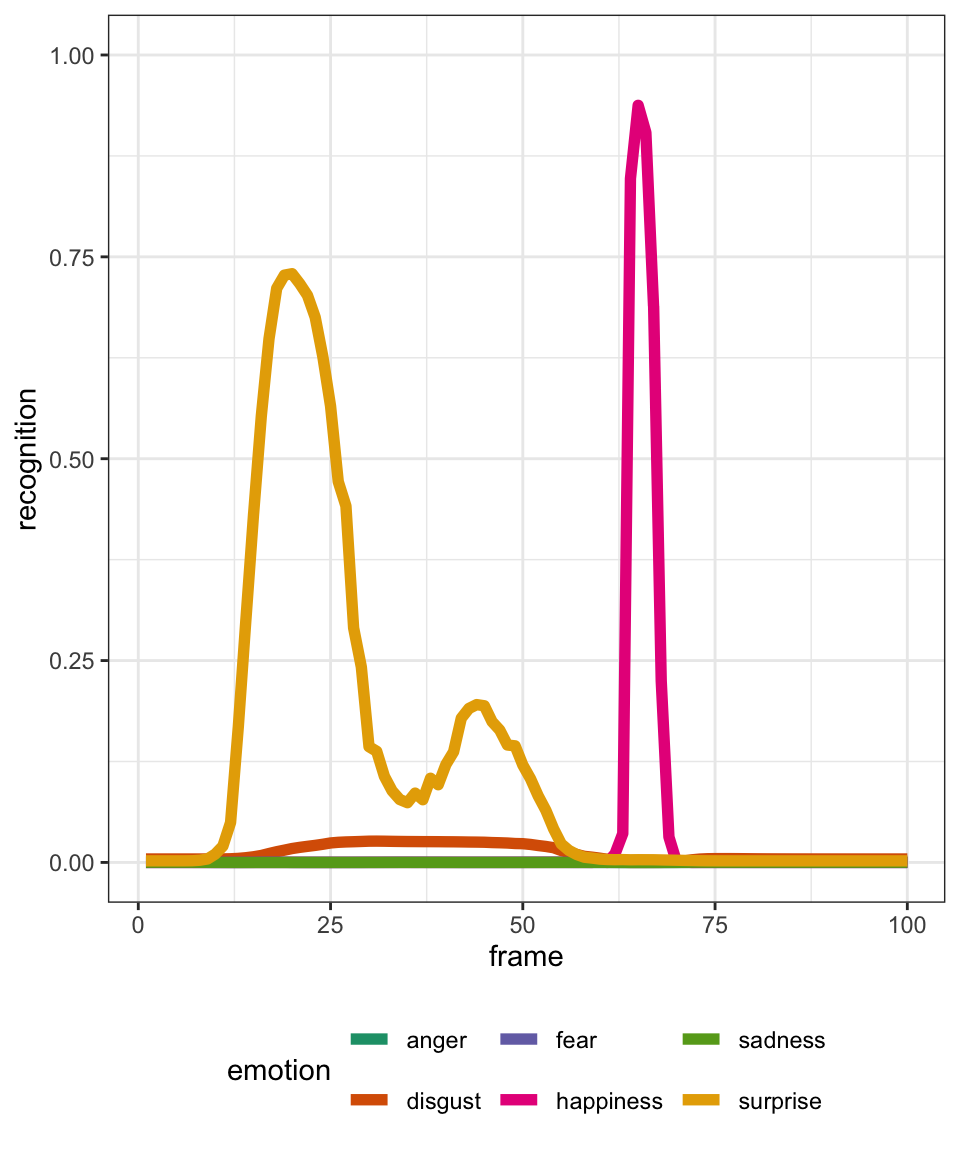

Single Recording

Let’s visualise a single video:

list_file <- unique(df_tidy_long$file)

df_tidy_long |>

filter(file == list_file[3]) |>

ggplot() +

aes(

x = frame,

y = recognition,

colour = emotion

) +

geom_line(linewidth = 2) +

theme_bw() +

theme(legend.position = "bottom") +

scale_y_continuous(limits = c(0, 1)) +

scale_color_brewer(palette = "Dark2")

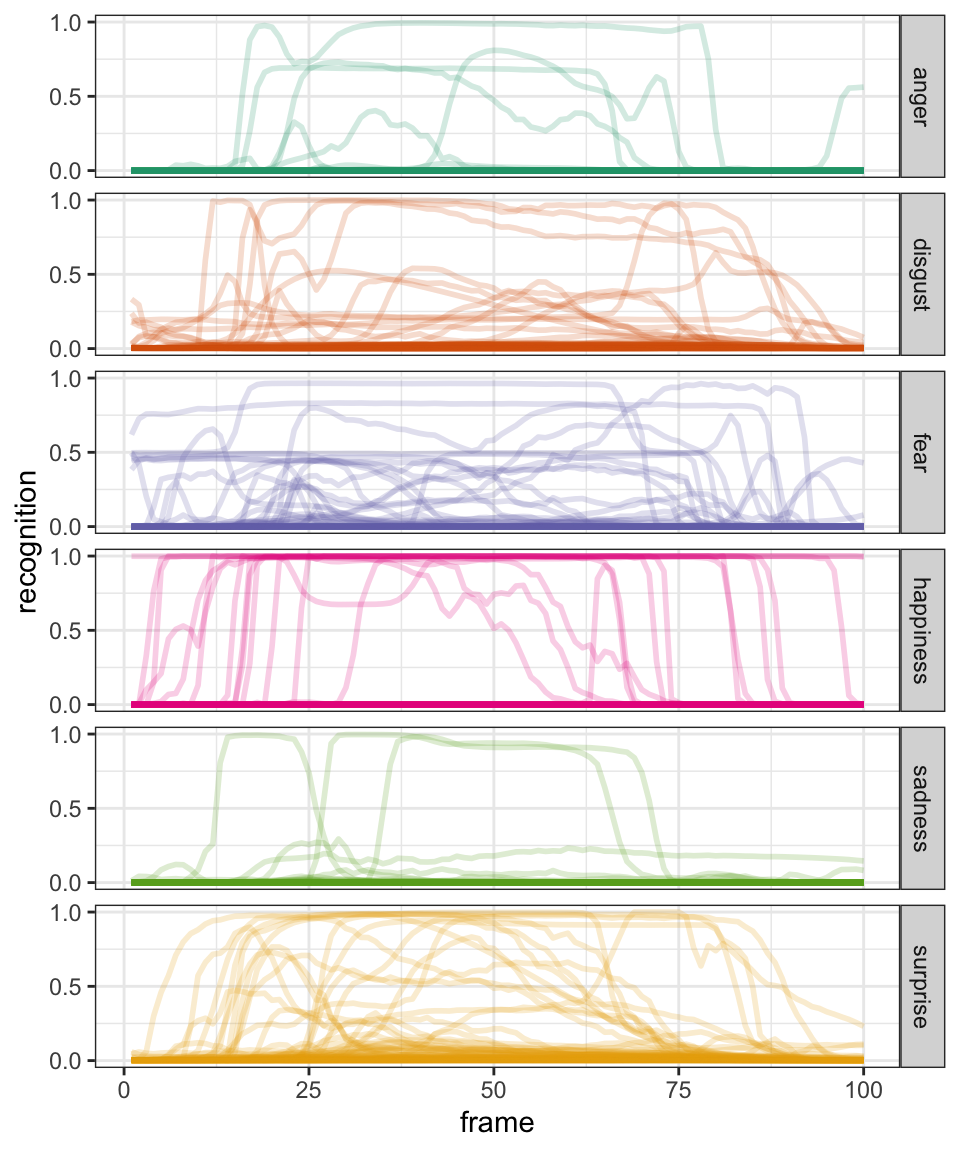

All Recordings for 1 Task

Let’s visualise recordings for one instruction task:

list_task <- unique(df_tidy_long$instruction)

df_tidy_long |>

filter(instruction == list_task[3]) |>

ggplot() +

aes(

x = frame,

y = recognition,

group = ppt,

colour = emotion

) +

geom_line(linewidth = 1, alpha = 0.2) +

facet_grid(emotion ~ ., switch = "x") +

theme_bw() +

theme(legend.position = "bottom") +

scale_y_continuous(breaks = c(0, 0.5, 1)) +

scale_color_brewer(palette = "Dark2") +

guides(colour = "none")

🛠️ Now, it’s Your Turn!

- Open “2_visualise.R” in the scripts/ folder

- Change the index numbers in the first two lines to explore different data

- Select all lines and click Run (or use keyboard shortcuts)

Warning

The code in the script “1_import_tidy_transform.R” must have been run before running the code in the script “2_visualise.R”.


3. Model


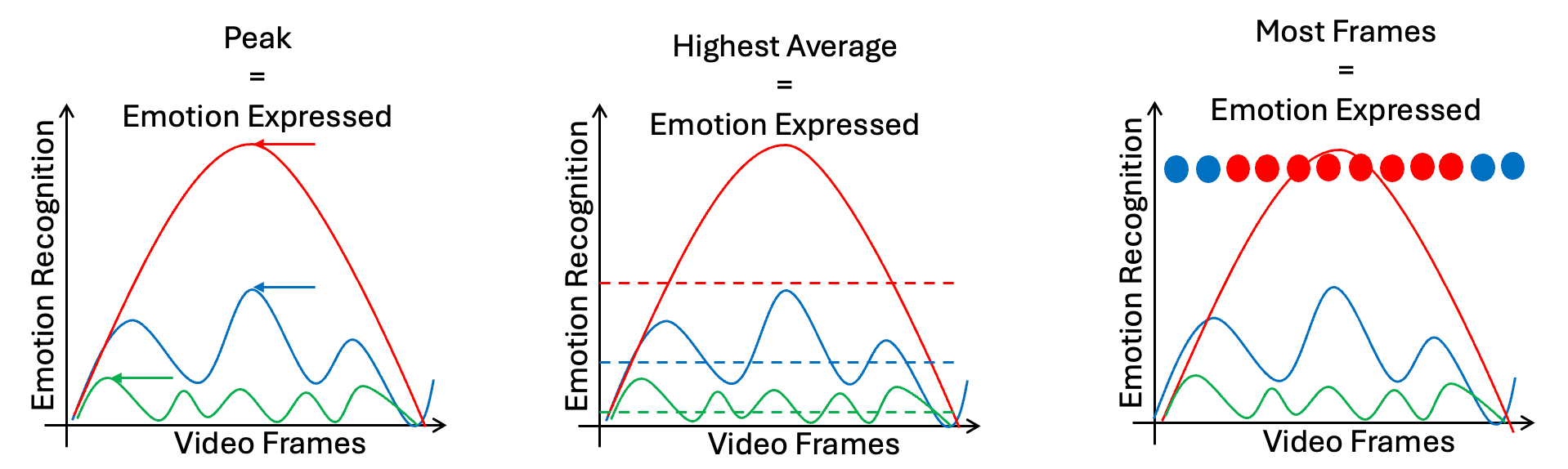

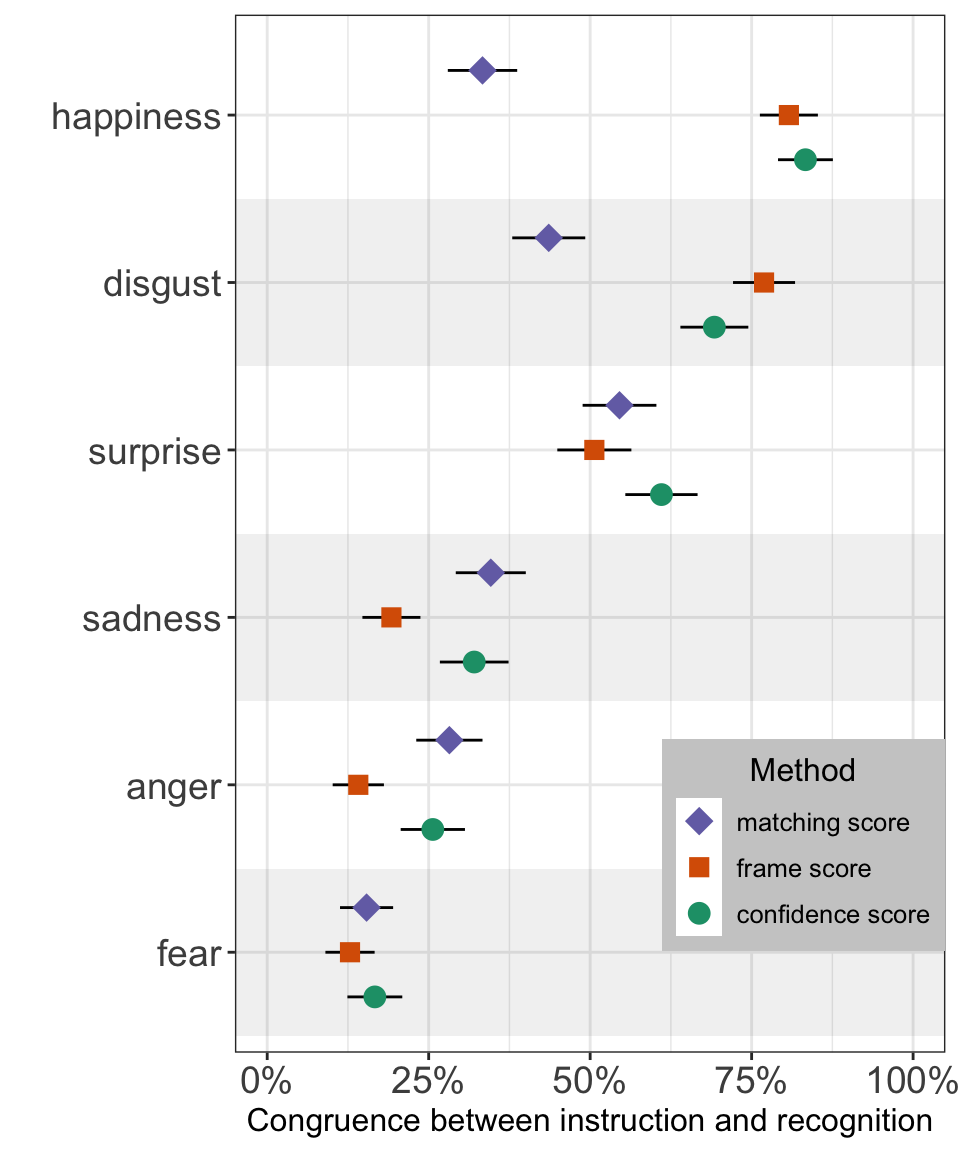

For each video, we aim to identify the expressed emotion using three distinct methods (as described in Dupré, 2021):

- Matching Score: Emotion with the highest single value

- Confidence Score: Emotion with the highest average value

- Frame Score: Emotion most frequently recognised across frames
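To see how the three rules can disagree, here is a minimal base R sketch on an invented five-frame recording with three emotions (the values are made up for illustration and do not come from the dataset):

```r
# Invented recognition values: one row per frame, one column per emotion
scores <- data.frame(
  frame     = 1:5,
  anger     = c(0.10, 0.10, 0.10, 0.10, 0.95),
  happiness = c(0.50, 0.50, 0.50, 0.50, 0.00),
  surprise  = c(0.60, 0.60, 0.60, 0.05, 0.05)
)
emotions <- c("anger", "happiness", "surprise")
values <- as.matrix(scores[emotions])

# Matching Score: emotion holding the single highest value of the recording
matching <- emotions[which.max(apply(values, 2, max))]

# Confidence Score: emotion with the highest average value
confidence <- emotions[which.max(colMeans(values))]

# Frame Score: emotion that wins the largest number of frames
frame_winner <- emotions[max.col(values, ties.method = "first")]
frame_score <- names(which.max(table(frame_winner)))

c(matching = matching, confidence = confidence, frame = frame_score)
```

In this toy recording a single late spike of anger decides the Matching Score, the steady happiness values decide the Confidence Score, and surprise wins the most frames, so each method returns a different label.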

Here is a visual representation of each method applied to a case in which all three methods return the same recognised emotion.

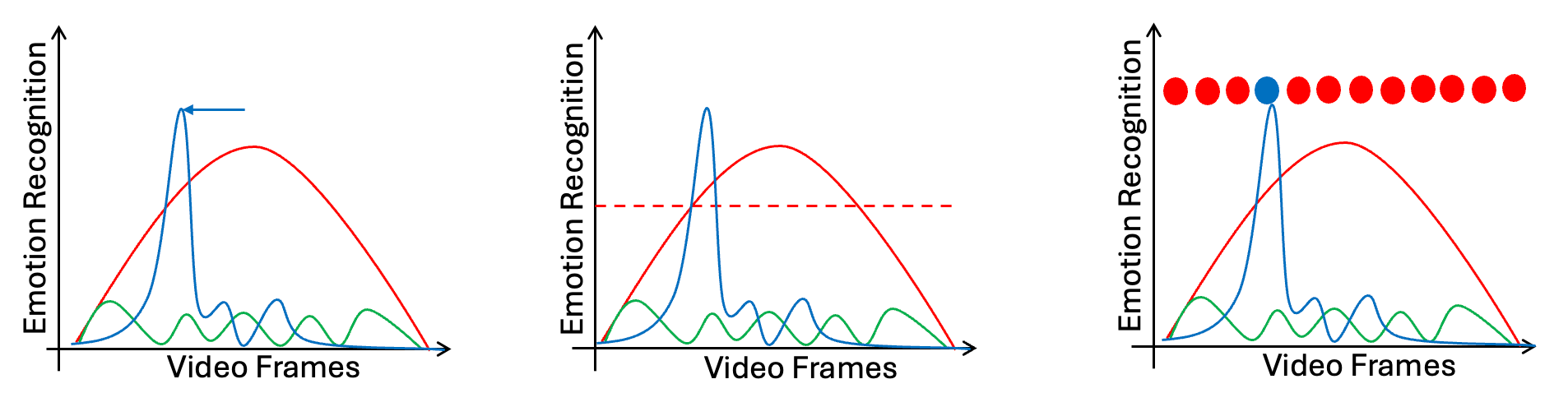

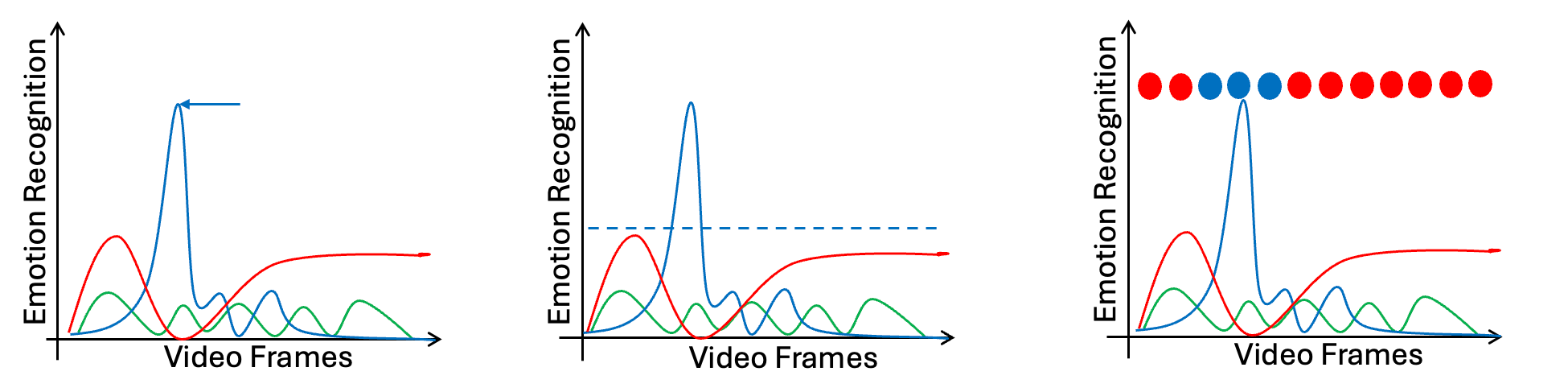

However, in some cases the methods return different results:

Disclaimer

Each of these methods has its advantages and disadvantages. For instance, Confidence Score and Frame Score may offer greater robustness against artefacts.

There may also be alternative calculation methods not included in this discussion.

Lastly, assigning a single label to an entire video is a simplification that could be questioned, though this issue lies outside the scope of the workshop.

Matching Score

The emotion recognised is the one with the highest single value in the recording.

df_score_matching <- df_tidy_long |>

# keep only the row(s) with the highest value in each file

group_by(file) |>

filter(recognition == max(recognition)) |>

# in case of ties (including one emotion reaching the maximum in several frames), label the emotion "undetermined" and remove duplicates

add_count() |>

mutate(emotion = case_when(n != 1 ~ "undetermined", .default = emotion)) |>

select(file, emotion) |>

distinct() |>

# label the method

mutate(method = "matching score")

Confidence Score

The emotion recognised is the one with the highest average value across the whole recording.

df_score_confidence <- df_tidy_long |>

# calculate the average value for each emotion in each file and keep the highest

group_by(file, emotion) |>

summarise(mean_emotion = mean(recognition, na.rm = TRUE)) |>

slice_max(mean_emotion) |>

# in case of ties, label the emotions "undetermined" and remove duplicates

add_count() |>

mutate(emotion = case_when(n != 1 ~ "undetermined", .default = emotion)) |>

select(file, emotion) |>

distinct() |>

# label the method

mutate(method = "confidence score")

Frame Score

The emotion recognised in each frame is the one with the highest value; the emotion recognised in the largest number of frames is then assigned to the video.

df_score_frame <- df_tidy_long |>

group_by(file, frame) |> # in each file, for each frame, find the highest value

slice_max(recognition) |>

add_count(name = "n_frame") |> # in case of ties, label the emotions "undetermined" and remove duplicates

mutate(emotion = case_when(n_frame != 1 ~ "undetermined", .default = emotion)) |>

select(file, frame, emotion) |>

distinct() |>

group_by(file, emotion) |> # count the occurrence of each emotion across all frames and select highest

count() |>

group_by(file) |>

slice_max(n) |>

add_count(name = "n_file") |> # in case of ties, label the emotions "undetermined" and remove duplicates

mutate(emotion = case_when(n_file != 1 ~ "undetermined", .default = emotion)) |>

select(file, emotion) |>

distinct() |>

mutate(method = "frame score") # label the method

Comparing Scores

Now that a label has been assigned to each recorded video using three different calculation methods, we can compare these labels with the “ground truth” (i.e., the type of emotion supposedly elicited).

df_congruency <-

bind_rows(

df_score_matching,

df_score_confidence,

df_score_frame

) |>

separate(col = file, into = c("ppt", "instruction"), sep = "_", remove = FALSE) |>

mutate(

instruction = instruction |>

tolower() |>

str_replace_all(c("happy" = "happiness", "sad" = "sadness", "angry" = "anger")),

congruency = if_else(instruction == emotion, 1, 0)

)

A Generalised Linear Model with a binomial distribution for the response (logistic regression) is fitted to estimate the effects of the calculation method, the instruction task, and their interaction.

To obtain omnibus estimates of these effects, an analysis of variance is applied to the fitted GLM.
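As a hedged sketch of this kind of analysis, the code below fits a logistic regression with glm() on simulated congruency data and requests one omnibus test per term with anova(). The data, the probabilities and the choice of a Chi-squared analysis of deviance are assumptions made for illustration; the exact calls in “3_model.R” may differ:

```r
set.seed(2025)

# Simulated stand-in for df_congruency: 40 hypothetical participants,
# 3 methods and 3 instruction tasks (values are not from the workshop data)
toy <- expand.grid(
  ppt = 1:40,
  method = c("matching score", "confidence score", "frame score"),
  instruction = c("anger", "happiness", "surprise")
)
p_congruent <- ifelse(toy$instruction == "happiness", 0.8, 0.4)
toy$congruency <- rbinom(nrow(toy), size = 1, prob = p_congruent)

# Logistic regression: binary congruency explained by method, instruction
# and their interaction
model <- glm(congruency ~ method * instruction, data = toy, family = binomial)

# Sequential analysis of deviance: one omnibus test per term
omnibus <- anova(model, test = "Chisq")
omnibus
```

The table has one row for the null model and one row per term (method, instruction, and their interaction).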

🛠️ Now, it’s Your Turn!

- Open “3_model.R” in the scripts/ folder

- Select all lines and click Run (or use keyboard shortcuts)

Warning

The code in the script “1_import_tidy_transform.R” must have been run before running the code in the script “3_model.R”.


4. Communicate

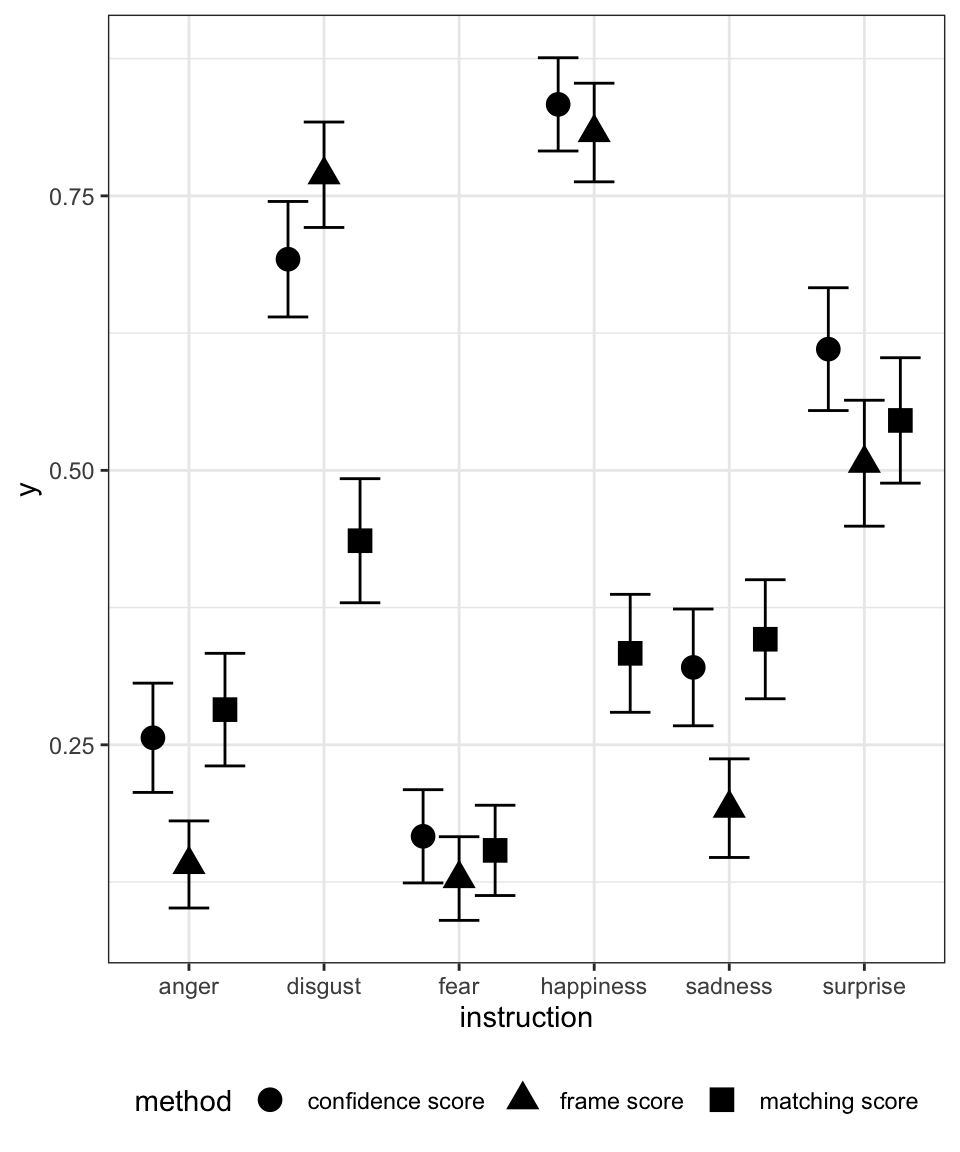

Effects Visualisation

Let’s calculate the average congruency by instruction task and by method, and display it in a basic visualisation:

df_congruency |>

group_by(method, instruction) |>

summarise(mean_se(congruency)) |>

ggplot() +

aes(

x = instruction,

y = y,

ymin = ymin,

ymax = ymax,

fill = method,

shape = method

) +

geom_errorbar(position = position_dodge(width = 0.8)) +

geom_point(

size = 4,

position = position_dodge(width = 0.8)

) +

theme_bw() +

theme(legend.position = "bottom")
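The y, ymin and ymax columns mapped in aes() above come from ggplot2::mean_se(), which returns the mean together with the mean minus and plus one standard error. A minimal check on an invented vector of congruency values:

```r
library(ggplot2)

# Invented congruency values: three congruent videos and one incongruent
x <- c(1, 1, 0, 1)

# One-row data frame with columns y (mean), ymin and ymax (mean -/+ 1 SE)
out <- mean_se(x)
out

# The half-width of the interval equals the standard error of the mean
standard_error <- sd(x) / sqrt(length(x))
all.equal(out$ymax - out$y, standard_error)
```

Because summarise() receives a data frame from mean_se(), its three columns are unpacked into the summary, which is why they can be used directly in the plot.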


Here is the same visualisation but with more customisations:

df_congruency |>

group_by(method, instruction) |>

summarise(mean_se(congruency)) |>

ggplot() +

aes(

x = fct_reorder(instruction, y, .fun = "mean"),

y = y,

ymin = ymin,

ymax = ymax,

fill = method,

shape = method

) +

ggstats::geom_stripped_cols() +

geom_errorbar(width = 0, position = position_dodge(width = 0.8)) +

geom_point(stroke = 0, size = 4, position = position_dodge(width = 0.8)) +

scale_y_continuous("Congruence between instruction and recognition", limits = c(0, 1), labels = scales::percent) +

scale_x_discrete("") +

scale_fill_brewer("Method", palette = "Dark2") +

scale_shape_manual("Method", values = c(21, 22, 23, 24)) +

guides(

shape = guide_legend(reverse = TRUE, position = "inside"),

fill = guide_legend(reverse = TRUE, position = "inside")

) +

theme_bw() +

theme(

text = element_text(size = 12),

axis.text.x = element_text(size = 14),

axis.text.y = element_text(size = 14),

axis.line.y = element_blank(),

legend.title = element_text(hjust = 0.5),

legend.position.inside = c(0.8, 0.2),

legend.background = element_rect(fill = "grey80")

) +

coord_flip(ylim = c(0, 1))

Effects Statistics

The ANOVA (formula: congruency ~ method * instruction) suggests that:

- The main effect of method is statistically significant and small (F(2, 1383) = 10.54, p < .001; Eta2 (partial) = 0.02, 95% CI [5.64e-03, 1.00])

- The main effect of instruction is statistically significant and large (F(5, 1383) = 61.00, p < .001; Eta2 (partial) = 0.18, 95% CI [0.15, 1.00])

- The interaction between method and instruction is statistically significant and small (F(10, 1383) = 8.16, p < .001; Eta2 (partial) = 0.06, 95% CI [0.03, 1.00])

Effect sizes were labelled following Field’s (2013) recommendations.

🛠️ Now, it’s Your Turn!

- Open “4_communicate.R” in the scripts/ folder

- Select all lines and click Run (or use keyboard shortcuts)

Warning

The code in the scripts “1_import_tidy_transform.R” and “3_model.R” must have been run before running the code in the script “4_communicate.R”.


5. Discussion and Conclusion

On Technology

- {Tidyverse} and the native |> pipe operator make code more readable and teachable

- Increased scientific transparency and reproducibility

- Being open-source encourages improved practices and sharing methods

- Easier to spot mistakes in the data processing

On Theory

- Each participant was instructed by a psychologist to gradually portray the six basic emotions in distinct sequences, so their expressions are posed rather than genuine

- Low congruence scores do not necessarily indicate an issue with the participants; they could be due to limitations of the recognition system

On Methods

All three methods use a relative indicator rather than an absolute threshold to identify the emotion recognised:

- A low score might still be the highest and thus chosen

- A minimum threshold should be introduced for more valid recognition
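A minimal sketch of such a threshold applied to the Confidence Score, assuming a hypothetical cut-off of 0.10 (this value and the toy averages below are invented for illustration, not taken from the workshop):

```r
library(dplyr)

# Toy average recognition per file and emotion (invented values)
df_mean <- tibble::tribble(
  ~file,        ~emotion,    ~mean_emotion,
  "F001_Happy", "happiness", 0.82,
  "F001_Happy", "surprise",  0.05,
  "F001_Sad",   "sadness",   0.04,
  "F001_Sad",   "anger",     0.02
)

min_threshold <- 0.10 # hypothetical cut-off

df_thresholded <- df_mean |>
  # keep the emotion with the highest average in each file
  group_by(file) |>
  slice_max(mean_emotion, n = 1, with_ties = FALSE) |>
  ungroup() |>
  # a winner below the cut-off is not considered a valid recognition
  mutate(emotion = if_else(mean_emotion < min_threshold, "undetermined", emotion))

df_thresholded
```

Here sadness has the highest average for “F001_Sad”, but at 0.04 it falls below the cut-off and the video is labelled “undetermined” instead.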

Method performance differs:

- Matching Score struggles with prolonged expressions like happiness and disgust

- Frame Score underperforms for surprise, sadness, anger, and fear

- Confidence Score, based on average values, appears the most robust method overall

Thanks for your attention and don’t hesitate to ask if you have any questions!

@damien_dupre

@damien-dupre

https://damien-dupre.github.io

damien.dupre@dcu.ie

Damien Dupré - CERE2025